Monday, August 11, 2025

Building a Visual Agent Workflow Builder: From Visual Composition to AI Companion Agents That Know Your Systems

Madhuri Ganta is a frontend developer at ContextDX, building user interfaces with React and Next.js. She also works on cloud infrastructure using AWS CDK. Based in the UK.

A visual orchestration tool that generates type-safe agentic workflows for architecture intelligence

The Vision: Architects Building AI Workflows Visually

We wanted to democratize AI workflow creation for software architects, business teams, and other stakeholders, specifically for providing architecture intelligence and automating mundane tasks. Users should be able to compose intelligent agents that understand their specific domain needs, either through automatic generation during workspace interactions or through visual customization when needed.

"The challenge? Different teams need different ways of extracting architecture intelligence. Some want to monitor Slack channels and diagram the outcome of discussions. Others research markets and enhance their architecture to respond to market demands. Still others assess technical debt."

What We Built: An Adaptive Graph Builder

Our platform automatically generates intelligent agents on the fly as customers begin using it. As users create and interact with architectural workspaces and boards, the system hydrates them with purpose-built AI agents tailored to their specific context and needs. When the system cannot build an agent successfully on its own, you can edit your domain-specific flows and evaluate them in the most type-safe way possible.

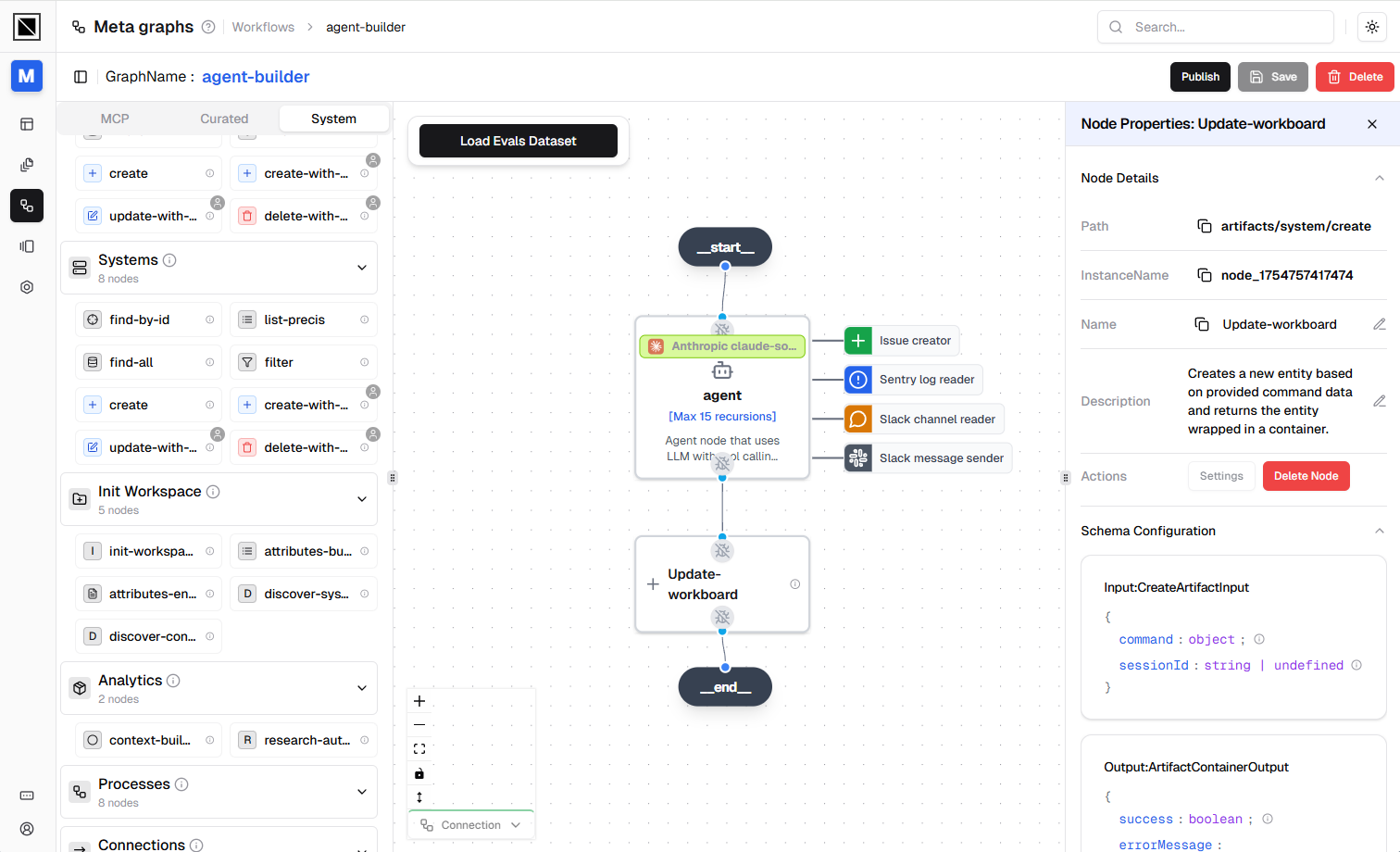

While agents are created automatically during workspace setup, users can edit, test, or manually build new ones using our Agent Builder. All of this happens in a completely type-safe manner through an intuitive visual interface.

Automatic Agent Generation

- Context-aware creation during workspace hydration, based on the user's architecture patterns and team structure

- Intelligent workflow composition, with workflows selected automatically based on the scope of the architecture analysis and on team roles
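To make the selection step concrete, here is a minimal TypeScript sketch. It shows how a workspace's context could pick a workflow template, and how generation could fall back to manual editing when a dependency cannot be resolved. Every name in it is hypothetical: `WorkspaceContext`, `AgentTemplate`, `generateAgent`, and the template IDs. The role-overlap ranking is an assumed heuristic, not ContextDX's actual generation logic.

```typescript
// Hypothetical sketch: choosing an agent workflow template during workspace
// hydration. All names are illustrative assumptions, not a real platform API.

interface WorkspaceContext {
  analysisScope: "discussions" | "market" | "codebase";
  teamRoles: string[];
  availableIntegrations: string[]; // connected tools, e.g. "slack"
}

interface AgentTemplate {
  id: string;
  scope: WorkspaceContext["analysisScope"];
  preferredRoles: string[];
  requiredIntegrations: string[];
}

type GenerationResult =
  | { status: "generated"; templateId: string }
  | { status: "needs-manual-edit"; templateId: string; missing: string[] }
  | { status: "no-match" };

function generateAgent(ctx: WorkspaceContext, templates: AgentTemplate[]): GenerationResult {
  // Only templates matching the workspace's analysis scope are candidates.
  const candidates = templates.filter((t) => t.scope === ctx.analysisScope);
  if (candidates.length === 0) return { status: "no-match" };

  // Assumed heuristic: prefer the template whose preferred roles best match the team.
  const roleOverlap = (t: AgentTemplate) =>
    t.preferredRoles.filter((r) => ctx.teamRoles.includes(r)).length;
  const best = [...candidates].sort((a, b) => roleOverlap(b) - roleOverlap(a))[0];

  // Any unresolved dependency hands the agent over to the visual editor.
  const missing = best.requiredIntegrations.filter(
    (dep) => !ctx.availableIntegrations.includes(dep),
  );
  return missing.length > 0
    ? { status: "needs-manual-edit", templateId: best.id, missing }
    : { status: "generated", templateId: best.id };
}

// Usage with made-up templates: Slack is not connected yet, so the
// best-matching agent is flagged for manual editing.
const exampleTemplates: AgentTemplate[] = [
  { id: "slack-decision-tracker", scope: "discussions", preferredRoles: ["architect"], requiredIntegrations: ["slack"] },
  { id: "meeting-summarizer", scope: "discussions", preferredRoles: ["product"], requiredIntegrations: [] },
];
const exampleResult = generateAgent(
  { analysisScope: "discussions", teamRoles: ["architect"], availableIntegrations: [] },
  exampleTemplates,
);
console.log(exampleResult); // status "needs-manual-edit", missing ["slack"]
```

Returning a discriminated union makes the "needs manual edit" path explicit. The editor can then show exactly which dependencies blocked automatic creation.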

Manage Dynamically Created Agents

- Visual editing interface for refining auto-generated agents to fine-tune behavior for specific use cases

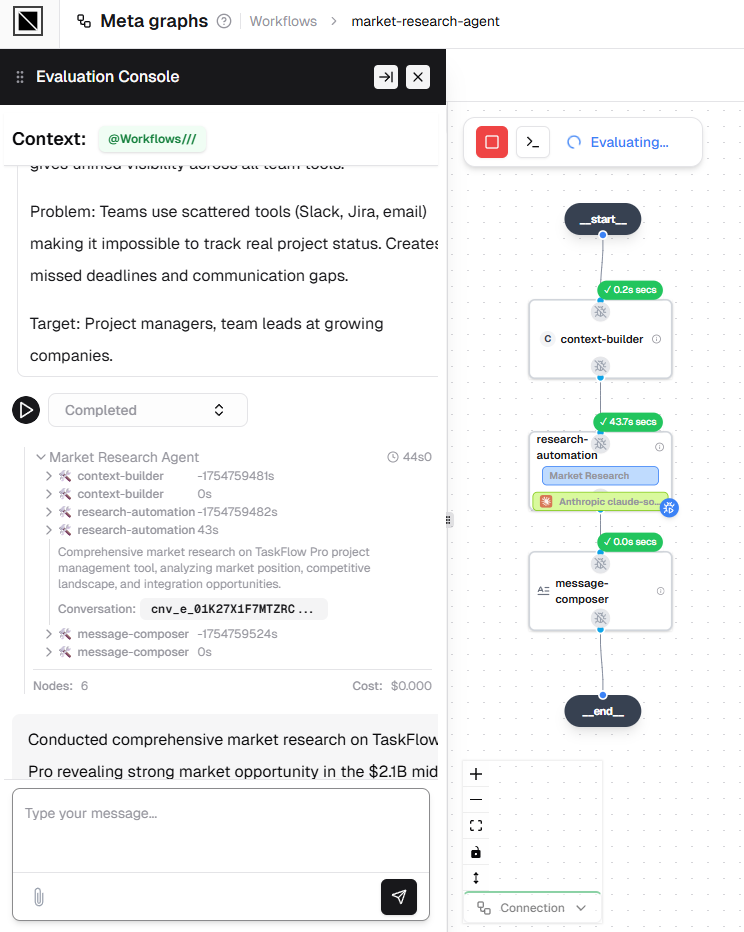

- Comprehensive evals layer where users can validate agent logic and verify the steps taken to reach a particular conclusion

- Manual agent editing, which enables human control when agent creation fails, for example when a dependency cannot be resolved

Agent evaluation interface displaying workflow execution steps and internal reasoning traces to audit decision-making processes and verify conclusion pathways.
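An eval of this kind can be pictured as a check over the recorded execution trace. The sketch below assumes a hypothetical trace format (`TraceStep`) and eval case (`EvalCase`). It verifies two things: the expected steps occur in order, and the final output matches an expected conclusion. It illustrates the idea only and is not the platform's evals API.

```typescript
// Hypothetical sketch of an eval over an agent's execution trace.
interface TraceStep {
  node: string;   // workflow node that ran
  output: string; // what the node produced (reasoning or result)
}

interface EvalCase {
  expectedPath: string[];     // nodes that must appear, in this order (gaps allowed)
  expectedConclusion: RegExp; // pattern the final output must match
}

interface EvalReport {
  passed: boolean;
  issues: string[];
}

function evaluateTrace(trace: TraceStep[], evalCase: EvalCase): EvalReport {
  const issues: string[] = [];

  // The expected nodes must occur as an ordered subsequence of the trace.
  let cursor = 0;
  for (const step of trace) {
    if (cursor < evalCase.expectedPath.length && step.node === evalCase.expectedPath[cursor]) {
      cursor++;
    }
  }
  if (cursor < evalCase.expectedPath.length) {
    issues.push(`missing or out-of-order step: ${evalCase.expectedPath[cursor]}`);
  }

  // Check the conclusion the agent finally reached.
  const lastStep = trace.length > 0 ? trace[trace.length - 1] : undefined;
  if (!lastStep) {
    issues.push("trace is empty");
  } else if (!evalCase.expectedConclusion.test(lastStep.output)) {
    issues.push(`unexpected conclusion: ${lastStep.output}`);
  }

  return { passed: issues.length === 0, issues };
}

// Usage with an illustrative trace.
const exampleTrace: TraceStep[] = [
  { node: "fetch-slack-thread", output: "42 messages" },
  { node: "extract-decisions", output: "Decision: adopt event sourcing" },
  { node: "summarize", output: "Recommend event sourcing for the orders service" },
];
const exampleReport = evaluateTrace(exampleTrace, {
  expectedPath: ["fetch-slack-thread", "extract-decisions"],
  expectedConclusion: /event sourcing/i,
});
console.log(exampleReport); // passed: true, no issues
```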

Tooling of the Future

- Component-based composition for building agent workflows by connecting nodes representing tools, data sources, and logic

- MCP ready: in addition to nodes for API calls, data processing, AI analysis, and human review points, one can evaluate and seamlessly bind MCP tools.

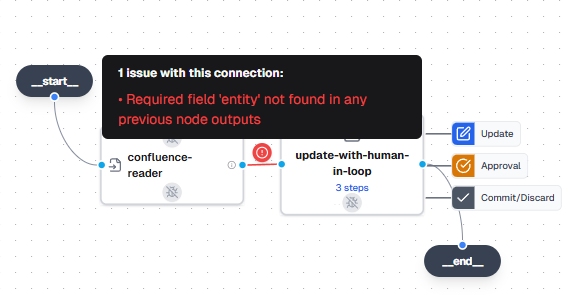

- Real-time validation feedback with instant issue highlighting as you compose workflows

- Type-safe connections ensuring data compatibility across the workflow at design time

Screenshot illustrating type-safe connections between workflow nodes in the visual builder.
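Design-time connection checking can be sketched as a structural compatibility test between port types. The TypeScript below is a minimal illustration under assumed names: `PortType`, `WorkflowNode`, `WorkflowEdge`, `isAssignable` and `validateWorkflow`. It reports incompatible connections and unconnected inputs as a list of issues, which is the kind of output a builder could highlight in real time. It is not the builder's actual type system.

```typescript
// Hypothetical port type model for a visual workflow builder.
type PortType =
  | { kind: "string" }
  | { kind: "number" }
  | { kind: "boolean" }
  | { kind: "array"; items: PortType }
  | { kind: "object"; fields: Record<string, PortType> };

interface WorkflowNode {
  id: string;
  inputs: Record<string, PortType>;
  outputs: Record<string, PortType>;
}

interface WorkflowEdge {
  from: { node: string; port: string };
  to: { node: string; port: string };
}

// Structural check: can a value of `source` type flow into a `target` port?
function isAssignable(source: PortType, target: PortType): boolean {
  if (source.kind !== target.kind) return false;
  if (source.kind === "array" && target.kind === "array") {
    return isAssignable(source.items, target.items);
  }
  if (source.kind === "object" && target.kind === "object") {
    // Every field the target needs must exist on the source with a compatible type.
    return Object.keys(target.fields).every((name) => {
      const sourceField = source.fields[name];
      return sourceField !== undefined && isAssignable(sourceField, target.fields[name]);
    });
  }
  return true; // identical primitive kinds
}

// Collect every issue so the editor can highlight all of them at once.
function validateWorkflow(nodes: WorkflowNode[], edges: WorkflowEdge[]): string[] {
  const byId = new Map(nodes.map((n) => [n.id, n] as const));
  const issues: string[] = [];
  for (const edge of edges) {
    const source = byId.get(edge.from.node)?.outputs[edge.from.port];
    const target = byId.get(edge.to.node)?.inputs[edge.to.port];
    const label = `${edge.from.node}.${edge.from.port} -> ${edge.to.node}.${edge.to.port}`;
    if (!source || !target) {
      issues.push(`${label}: unknown node or port`);
    } else if (!isAssignable(source, target)) {
      issues.push(`${label}: incompatible types`);
    }
  }
  for (const node of nodes) {
    for (const port of Object.keys(node.inputs)) {
      const connected = edges.some((e) => e.to.node === node.id && e.to.port === port);
      if (!connected) issues.push(`${node.id}.${port}: input is not connected`);
    }
  }
  return issues;
}

// Usage: the Slack messages fit the summarizer but not a numeric input.
const messageList: PortType = {
  kind: "array",
  items: { kind: "object", fields: { author: { kind: "string" }, text: { kind: "string" } } },
};
const exampleNodes: WorkflowNode[] = [
  { id: "slack", inputs: {}, outputs: { messages: messageList } },
  {
    id: "summarize",
    inputs: { messages: { kind: "array", items: { kind: "object", fields: { text: { kind: "string" } } } } },
    outputs: { summary: { kind: "string" } },
  },
  { id: "score", inputs: { value: { kind: "number" } }, outputs: {} },
];
const exampleEdges: WorkflowEdge[] = [
  { from: { node: "slack", port: "messages" }, to: { node: "summarize", port: "messages" } },
  { from: { node: "slack", port: "messages" }, to: { node: "score", port: "value" } },
];
console.log(validateWorkflow(exampleNodes, exampleEdges)); // one issue: slack.messages -> score.value
```

Structural (rather than nominal) matching lets a node accept any upstream output that carries at least the fields it needs. This suits outputs whose shapes are inferred from external APIs.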

From Visual to Executable

When users click "Deploy," the visual workflow compiles into a LangGraph graph executed efficiently on our Node.js backend. The builder validates input/output types for each node, automatically infers schemas when integrating dynamic APIs, and handles MCP-compatible tool integration seamlessly.
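Schema inference for a dynamic API can be approximated by walking a sample JSON response and recording the type found at each position. This is a simplified sketch with hypothetical names (`InferredType`, `inferSchema`), not the builder's implementation. It assumes the first element of an array represents all elements. A production version would likely need to relax that assumption, for example by merging the types of all elements.

```typescript
// Hypothetical sketch: infer a port schema from a sample API response.
type InferredType =
  | { kind: "null" }
  | { kind: "string" }
  | { kind: "number" }
  | { kind: "boolean" }
  | { kind: "array"; items: InferredType | null } // null: empty array, element type unknown
  | { kind: "object"; fields: Record<string, InferredType> };

function inferSchema(value: unknown): InferredType {
  if (value === null) return { kind: "null" };
  if (Array.isArray(value)) {
    // Assumption: the first element is representative of the whole array.
    return { kind: "array", items: value.length > 0 ? inferSchema(value[0]) : null };
  }
  switch (typeof value) {
    case "string":
      return { kind: "string" };
    case "number":
      return { kind: "number" };
    case "boolean":
      return { kind: "boolean" };
    case "object": {
      // Plain JSON object: infer each field recursively.
      const record = value as Record<string, unknown>;
      const fields: Record<string, InferredType> = {};
      for (const key of Object.keys(record)) {
        fields[key] = inferSchema(record[key]);
      }
      return { kind: "object", fields };
    }
    default:
      throw new Error(`unsupported JSON value of type ${typeof value}`);
  }
}

// Usage with an illustrative response body.
const sampleResponse = { id: 7, name: "orders-api", tags: ["core"], owner: null };
console.log(JSON.stringify(inferSchema(sampleResponse)));
// {"kind":"object","fields":{"id":{"kind":"number"},"name":{"kind":"string"},"tags":{"kind":"array","items":{"kind":"string"}},"owner":{"kind":"null"}}}
```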

"The magic happens when workspace interactions automatically spawn intelligent agents—users get purpose-built AI systems without manual construction, yet can always customize through visual composition when needed."

Real-World Use Cases: Automatic Agent Intelligence

Slack Intelligence Integration

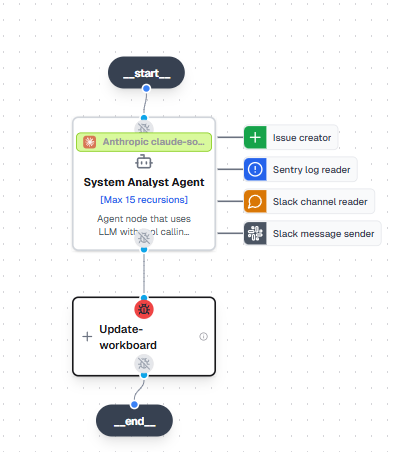

Teams monitoring Slack channels for architectural discussions can automatically generate agents that parse conversations, extract key decisions, and create visual diagrams showing the evolution of architectural thinking. Such a custom agent understands context from previous discussions and can identify when decisions contradict earlier choices.
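Contradiction detection can be illustrated once decisions have been extracted from conversations. The sketch assumes a hypothetical `Decision` shape. It also assumes that an earlier extraction step (for example, an LLM call) has already produced decisions in chronological order. It then flags any new decision whose choice differs from the latest earlier decision on the same topic. This is an illustration, not the agent's actual logic.

```typescript
// Hypothetical sketch: flag newly extracted decisions that contradict earlier ones.
interface Decision {
  topic: string;     // e.g. "orders persistence"
  choice: string;    // e.g. "PostgreSQL"
  messageTs: string; // timestamp of the Slack message the decision came from
}

interface Contradiction {
  topic: string;
  earlier: Decision;
  later: Decision;
}

// Assumes both arrays are in chronological order.
function findContradictions(history: Decision[], incoming: Decision[]): Contradiction[] {
  const normalize = (s: string) => s.trim().toLowerCase();

  // Latest known decision per topic, keyed by normalized topic name.
  const latest = new Map<string, Decision>();
  for (const d of history) latest.set(normalize(d.topic), d);

  const contradictions: Contradiction[] = [];
  for (const d of incoming) {
    const key = normalize(d.topic);
    const earlier = latest.get(key);
    if (earlier && normalize(earlier.choice) !== normalize(d.choice)) {
      contradictions.push({ topic: d.topic, earlier, later: d });
    }
    latest.set(key, d); // the newer decision becomes the reference point
  }
  return contradictions;
}

// Usage with illustrative decisions.
const exampleHistory: Decision[] = [
  { topic: "Orders persistence", choice: "PostgreSQL", messageTs: "1723370000.000100" },
];
const exampleIncoming: Decision[] = [
  { topic: "orders persistence", choice: "DynamoDB", messageTs: "1723456400.000200" },
  { topic: "Public API style", choice: "REST", messageTs: "1723456500.000300" },
];
const found = findContradictions(exampleHistory, exampleIncoming);
console.log(found.map((c) => `${c.topic}: ${c.earlier.choice} -> ${c.later.choice}`));
// one entry: "orders persistence: PostgreSQL -> DynamoDB"
```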

Market-Responsive Architecture

Architecture teams researching market trends can spawn agents that continuously monitor industry patterns, competitor analysis, and technology adoption rates. Such a custom agent automatically suggests architectural enhancements to respond to market demands, highlighting opportunities for competitive advantage.

Technical Debt Assessment

Development teams can automatically generate agents that analyze codebases, track dependency health, and assess technical debt accumulation. Such a custom agent understands team-specific patterns and can prioritize debt reduction efforts based on actual impact on development velocity.
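One way to prioritize by velocity impact is to rank debt items by how much time they cost per sprint relative to the effort needed to fix them. The metrics here (`hoursLostPerSprint`, `fixEffortHours`) and the ratio itself are assumptions for illustration, not the scoring the platform uses.

```typescript
// Hypothetical sketch: rank technical-debt items by velocity impact per unit of effort.
interface DebtItem {
  id: string;
  hoursLostPerSprint: number; // assumed metric: time the team loses to this item each sprint
  fixEffortHours: number;     // assumed estimate of the effort to pay the debt down
}

interface RankedDebt extends DebtItem {
  score: number; // hours recovered per sprint for each hour invested
}

function prioritizeDebt(items: DebtItem[]): RankedDebt[] {
  return items
    .filter((item) => item.fixEffortHours > 0) // items without an effort estimate are skipped
    .map((item) => ({ ...item, score: item.hoursLostPerSprint / item.fixEffortHours }))
    .sort((a, b) => b.score - a.score); // highest return first
}

// Usage with made-up numbers.
const ranked = prioritizeDebt([
  { id: "legacy-auth-module", hoursLostPerSprint: 6, fixEffortHours: 40 },
  { id: "flaky-integration-tests", hoursLostPerSprint: 10, fixEffortHours: 16 },
  { id: "outdated-orm", hoursLostPerSprint: 2, fixEffortHours: 60 },
]);
console.log(ranked.map((r) => r.id));
// flaky-integration-tests, legacy-auth-module, outdated-orm
```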

Visual graph showing agent nodes and connections for architecture intelligence workflow.

For the technical implementation details of how these visual workflows compile to type-safe LangGraph executions, see our companion post on building production-ready agentic workflows.